How to optimize AWS S3 storage costs with simple bucket analytics

Development, resilience, security: all of them take priority over cost management. Yet if costs are not managed in time, they can have a significant impact on the monthly bill. We haven't been immune to this, so we started looking at how to optimize our service usage.

AWS Cost Explorer

We host most of our services on AWS, so we opened Cost Explorer and started with a simple cost analysis. Three services stood out as obvious budget drains:

RDS

S3

ECS

We figured that optimizing RDS and ECS would take more effort than optimizing S3. That's how we concluded that focusing on S3 first would give us a quick win.

S3 cost segmentation

By tweaking the filters in Cost Explorer, we came up with a visualization of S3 costs. The main driver of the high cost turned out to be storage itself, that is, the price of keeping the data in S3, rather than requests or transfer.
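If you prefer to script this breakdown instead of clicking through filters, the Cost Explorer API can return a similar split. A minimal sketch, assuming boto3's Cost Explorer `get_cost_and_usage` call and assuming S3 appears under the SERVICE dimension value `Amazon Simple Storage Service`: it builds the request arguments, groups costs by `USAGE_TYPE`, and sums the returned amounts so the largest usage type comes first. The function names and dates are hypothetical.

```python
from collections import defaultdict


def build_s3_cost_query(start: str, end: str) -> dict:
    """Keyword arguments for boto3's ce.get_cost_and_usage (dates YYYY-MM-DD, end exclusive)."""
    return {
        "TimePeriod": {"Start": start, "End": end},
        "Granularity": "MONTHLY",
        "Metrics": ["UnblendedCost"],
        # Assumption: this is how S3 is named in the SERVICE dimension.
        "Filter": {"Dimensions": {"Key": "SERVICE", "Values": ["Amazon Simple Storage Service"]}},
        # Split the S3 bill into usage types (storage, requests, transfer, ...).
        "GroupBy": [{"Type": "DIMENSION", "Key": "USAGE_TYPE"}],
    }


def totals_by_usage_type(response: dict) -> dict:
    """Sum UnblendedCost per usage type over all periods, largest first.

    Pagination (NextPageToken) is ignored here for brevity.
    """
    totals = defaultdict(float)
    for period in response["ResultsByTime"]:
        for group in period.get("Groups", []):
            usage_type = group["Keys"][0]
            totals[usage_type] += float(group["Metrics"]["UnblendedCost"]["Amount"])
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))
```

Pass the query to `boto3.client("ce").get_cost_and_usage(**build_s3_cost_query("2021-01-01", "2021-07-01"))` and feed the response to `totals_by_usage_type`. If storage dominates, storage usage types (their names typically contain `TimedStorage`) should show up at the top.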

We store video files, so the high cost didn't come as a surprise. Still, it was too much.

S3 storage classes

We suspected that the objects in our S3 buckets weren't in the proper storage class. For those unfamiliar with them, S3 supports the following storage classes:

S3 Standard

S3 Intelligent-Tiering

S3 Standard IA

S3 One Zone IA

S3 Glacier

S3 Glacier Deep Archive

S3 Outposts
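In API calls and lifecycle rules, these classes go by uppercase identifiers rather than their console names. A small lookup table, to the best of our knowledge of the values the S3 API accepts (double-check against the current S3 documentation), plus the subset of the classes above that a lifecycle rule can transition objects into. The helper names are hypothetical.

```python
# Console-style name -> StorageClass value used by the S3 API (e.g. in boto3 calls).
STORAGE_CLASS_IDS = {
    "S3 Standard": "STANDARD",
    "S3 Intelligent-Tiering": "INTELLIGENT_TIERING",
    "S3 Standard IA": "STANDARD_IA",
    "S3 One Zone IA": "ONEZONE_IA",
    "S3 Glacier": "GLACIER",
    "S3 Glacier Deep Archive": "DEEP_ARCHIVE",
    "S3 Outposts": "OUTPOSTS",
}

# Among the classes above, the ones a lifecycle transition can target
# (Standard and Outposts are not transition targets).
LIFECYCLE_TARGETS = {"INTELLIGENT_TIERING", "STANDARD_IA", "ONEZONE_IA", "GLACIER", "DEEP_ARCHIVE"}


def api_id(console_name: str) -> str:
    """Translate a console storage class name into its API identifier."""
    try:
        return STORAGE_CLASS_IDS[console_name]
    except KeyError:
        raise ValueError(f"unknown storage class: {console_name!r}") from None


def can_transition_to(console_name: str) -> bool:
    """True if a lifecycle rule can move objects into this class."""
    return api_id(console_name) in LIFECYCLE_TARGETS
```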

Each storage class has its own specific pricing model. If you want to deep dive into the details, make sure to read about it here.

To sum up:

S3 Standard has the cheapest object access and transfer prices, but the most expensive per-GB storage price. It is meant for frequently accessed objects, for example objects read daily.

S3 Standard IA is a middle ground: object access and transfer are more expensive, but storage is cheaper. It is meant for infrequently accessed objects.

S3 Glacier and S3 Glacier Deep Archive are used for archiving objects. Objects stored in these classes can't be accessed in real time; they have to be restored first.

Cost Explorer couldn't give us any additional details, so we moved to the S3 console to continue the analysis.

Storage Lens

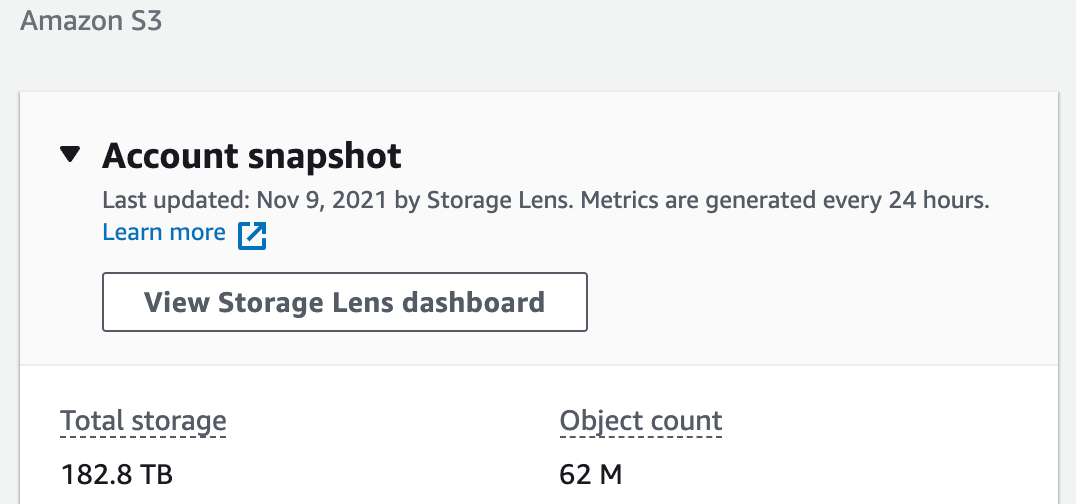

The Storage Lens dashboard is accessible from the S3 console homepage. Once we opened it, we were surprised by how much our data had grown over time: 180TB and counting.

By exploring different options in Storage Lens, we discovered three outstanding buckets, holding 110TB, 50TB and 20TB respectively. But that was as much as we could find out at that point. To make a proper decision about what to do with this enormous set of data, we needed deeper, per-bucket analytics.

Storage class analysis

To get a detailed bucket analysis, we created a new analytics configuration. It has to be set up for each bucket individually.

Open the target bucket and select the Metrics tab. Under the "Storage Class Analysis" section, choose "Create analytics configuration".

Define a configuration name and choose the options shown in the following screenshot.
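With many buckets, clicking through the console for each one gets tedious, and the same configuration can be created through the API. A minimal sketch, assuming boto3's `put_bucket_analytics_configuration` call and a CSV export to a separate destination bucket; the configuration ID, bucket names and prefix are hypothetical, and the options may differ from what you pick in the console. The destination bucket also needs a bucket policy that allows S3 to write the export files into it.

```python
from typing import Optional


def build_analytics_configuration(
    config_id: str,
    destination_bucket: str,
    prefix: Optional[str] = None,
    account_id: Optional[str] = None,
) -> dict:
    """Storage Class Analysis config for a whole bucket, exported daily as CSV."""
    destination = {
        "Format": "CSV",
        # The destination is given as a bucket ARN, not a plain bucket name.
        "Bucket": f"arn:aws:s3:::{destination_bucket}",
    }
    if prefix:
        destination["Prefix"] = prefix
    if account_id:
        destination["BucketAccountId"] = account_id
    return {
        "Id": config_id,
        # No "Filter" key: analyze every object in the bucket.
        "StorageClassAnalysis": {
            "DataExport": {
                "OutputSchemaVersion": "V_1",
                "Destination": {"S3BucketDestination": destination},
            }
        },
    }


def enable_storage_class_analysis(bucket: str, configuration: dict) -> None:
    """Apply the configuration; requires boto3 and AWS credentials."""
    import boto3

    boto3.client("s3").put_bucket_analytics_configuration(
        Bucket=bucket, Id=configuration["Id"], AnalyticsConfiguration=configuration
    )
```

For example, `enable_storage_class_analysis("video-bucket", build_analytics_configuration("whole-bucket", "analytics-exports", prefix="video-bucket/"))`, repeated for every bucket you want to analyze.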

Note: Even if you don't plan to do any S3 optimizations soon, turn on bucket analytics today. You will gather valuable information throughout the year. Based on this data, you will make a final decision on how to optimize S3 costs.

Object usage analysis

Storage Class Analysis exports its results as a CSV file. Once you open that file in a spreadsheet, you can see several important columns:

StorageClass

ObjectAge

Storage_MB

DataRetrieved_MB

ObjectCount

GetRequestCount

What do we look at first? We want to know how often S3 objects are accessed, and the DataRetrieved_MB and GetRequestCount columns tell us exactly that. What's more, that information is segmented by ObjectAge.
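To go through the export in code rather than in a spreadsheet, a few lines of Python are enough. This sketch assumes the export is a CSV whose header contains the StorageClass, ObjectAge, DataRetrieved_MB and GetRequestCount columns, and that rows are listed from the youngest to the oldest age group; it sums retrieved data and GET requests per ObjectAge group across all days in the file. The helper names are hypothetical.

```python
import csv
import io
from typing import Dict, Optional


def access_by_object_age(csv_text: str, storage_class: Optional[str] = None) -> Dict[str, dict]:
    """Sum DataRetrieved_MB and GetRequestCount per ObjectAge group.

    Groups keep the order in which they first appear in the file.
    """
    totals: Dict[str, dict] = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        if storage_class is not None and row["StorageClass"] != storage_class:
            continue
        group = totals.setdefault(row["ObjectAge"], {"DataRetrieved_MB": 0.0, "GetRequestCount": 0})
        # Treat empty cells as zero.
        group["DataRetrieved_MB"] += float(row["DataRetrieved_MB"] or 0)
        group["GetRequestCount"] += int(float(row["GetRequestCount"] or 0))
    return totals


def first_idle_age_group(totals: Dict[str, dict]) -> Optional[str]:
    """First age group from which this and every later group has no retrievals, or None."""
    ages = list(totals)
    for i, age in enumerate(ages):
        if all(
            totals[a]["DataRetrieved_MB"] == 0 and totals[a]["GetRequestCount"] == 0
            for a in ages[i:]
        ):
            return age
    return None
```

Something like `first_idle_age_group(access_by_object_age(open("export.csv").read(), "STANDARD"))` (file name hypothetical) points at the age after which a bucket's objects stop being read.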

You can see example analytics for one of our buckets.

It is quite obvious here that the system accesses objects only in the first couple of days of their life. After that, the objects are not used anymore.

Unfortunately for us, all objects were stored in the S3 Standard storage class, which has the most expensive per-GB storage price.

Optimization steps

Common sense told us we had two options:

Delete all objects older than 14 days

Move all objects older than 14 days to Glacier Deep Archive

Before validating those options, we had to look at other factors that influence cost.

Transfer costs

When you move files from one storage class to another, you pay a per-request transition fee. The most expensive destination is Glacier Deep Archive, at $0.05 per 1,000 requests.

So look at the ObjectCount column to see how many objects you would have to move: each object is one transition request. To save money in the long term, you have to pay something upfront.
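The arithmetic is simple enough to sketch. Assuming the $0.05 per 1,000 requests Deep Archive price quoted above (prices vary by region and change over time), the upfront cost is the number of objects you move divided by 1,000, times the price. The function name is hypothetical.

```python
from typing import Iterable


def transition_cost_usd(object_counts: Iterable[int], price_per_1000_requests: float = 0.05) -> float:
    """Upfront lifecycle transition cost: one request per object moved.

    object_counts: ObjectCount values of the age groups you plan to move,
    for example taken from the analytics export.
    """
    total_objects = sum(object_counts)
    return total_objects / 1000 * price_per_1000_requests
```

For example, moving two age groups with 4 million and 6 million objects, `transition_cost_usd([4_000_000, 6_000_000])`, costs about $500 in transition requests.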

Glacier meta-files

To store objects in Glacier Deep Archive, S3 keeps extra metadata that describes the archived objects. For each object transitioned to Glacier, S3 adds two pieces of overhead: 8KB billed at S3 Standard rates and 32KB billed at Glacier rates.

Why is this important? If a bucket holds a huge number of small objects (a few KB each), you may never reach a break-even point.

In that case, you might spend more money on transitioning the objects and storing their metadata than you save on storage.
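To check whether a bucket of small objects can ever pay off, compare each object's monthly storage saving against its one-off transition fee. A minimal sketch, assuming us-east-1 list prices of $0.023 per GB-month for S3 Standard and $0.00099 per GB-month for Glacier Deep Archive (check current pricing for your region), 1GB = 1,048,576KB, and the 8KB + 32KB overhead described above. It ignores other charges such as minimum storage durations and retrievals; the function names are hypothetical.

```python
from typing import Optional

KB_PER_GB = 1024 * 1024  # assumption: binary units (1GB = 1,048,576KB)

# Assumed us-east-1 list prices in USD per GB-month; check current pricing.
STANDARD_PRICE = 0.023
DEEP_ARCHIVE_PRICE = 0.00099

# Per-object overhead once an object is archived.
STANDARD_OVERHEAD_KB = 8   # billed at S3 Standard rates
ARCHIVE_OVERHEAD_KB = 32   # billed at Deep Archive rates


def monthly_saving_usd(size_kb: float, standard: float = STANDARD_PRICE, archive: float = DEEP_ARCHIVE_PRICE) -> float:
    """Monthly storage saving for one object moved from Standard to Deep Archive."""
    before = size_kb * standard / KB_PER_GB
    after = ((size_kb + ARCHIVE_OVERHEAD_KB) * archive + STANDARD_OVERHEAD_KB * standard) / KB_PER_GB
    return before - after


def break_even_months(size_kb: float, price_per_1000_requests: float = 0.05) -> Optional[float]:
    """Months until the saving repays the transition fee, or None if it never does."""
    saving = monthly_saving_usd(size_kb)
    if saving <= 0:
        return None  # the metadata overhead eats the whole saving
    return (price_per_1000_requests / 1000) / saving


def min_profitable_size_kb(standard: float = STANDARD_PRICE, archive: float = DEEP_ARCHIVE_PRICE) -> float:
    """Object size at which the monthly saving is exactly zero."""
    overhead = STANDARD_OVERHEAD_KB * standard + ARCHIVE_OVERHEAD_KB * archive
    return overhead / (standard - archive)
```

With these assumed prices, objects smaller than roughly 10KB never break even, while a 1MB object (`break_even_months(1024)`) pays back its transition fee in a little over two months.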

Fortunately, that wasn't our situation, so we moved on with the initial plan: move all files older than 15 days to the Glacier Deep Archive storage class.

How do we do that?

Lifecycle rules

What's great about S3 storage class transitions is that you don't have to do them by hand. All you need is a lifecycle rule that moves objects automatically based on their age.

To set a lifecycle rule:

Go to the bucket's "Management" tab.

Choose "Create lifecycle rule".

We set a rule action that applies to all objects in the bucket, as follows:

And that's all it takes. From now on, every object older than 15 days will transfer to Glacier Deep Archive.
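The same rule can also be kept in code, which makes it easy to apply to several buckets. A minimal sketch, assuming boto3's `put_bucket_lifecycle_configuration`; the rule ID and bucket name are hypothetical. Keep in mind that this call replaces the bucket's entire existing lifecycle configuration, so include any other rules you want to keep.

```python
def deep_archive_lifecycle(days: int = 15, rule_id: str = "archive-after-15-days") -> dict:
    """Lifecycle configuration moving every object to Glacier Deep Archive after `days` days."""
    return {
        "Rules": [
            {
                "ID": rule_id,
                # An empty prefix filter makes the rule apply to all objects in the bucket.
                "Filter": {"Prefix": ""},
                "Status": "Enabled",
                "Transitions": [{"Days": days, "StorageClass": "DEEP_ARCHIVE"}],
            }
        ]
    }


def apply_lifecycle(bucket: str, configuration: dict) -> None:
    """Apply the configuration; requires boto3 and AWS credentials.

    This replaces any lifecycle configuration already set on the bucket.
    """
    import boto3

    boto3.client("s3").put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=configuration
    )
```

Usage: `apply_lifecycle("video-bucket", deep_archive_lifecycle())`.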

More complex scenarios

The access pattern of the bucket in the example above is not that common. Usually, objects are accessed throughout the year, not only in the first two weeks. For another bucket, we set the lifecycle rules as follows:

After 30 days, move files from "S3 Standard" to "S3 Standard IA".

In the first 30 days, 80% of the work on those objects is done. That's why S3 Standard Infrequent Access fits in: it's cheaper to store, but still provides real-time access.

After 18 months, move files from "S3 Standard IA" to "S3 Glacier Deep Archive".

After 18 months, an object is no longer available in real time. Someone might still ask for that file, in which case they will have to wait up to 12 hours for it to be restored from Glacier Deep Archive. That's the tradeoff we accepted in exchange for reduced storage costs.
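Expressed as a lifecycle configuration, this two-step policy is a single rule with two transitions. A sketch under assumptions: lifecycle rules count in days, so 18 months is approximated here as 540 days (our choice; pick whatever count matches your retention policy), and the rule ID is hypothetical. The 30-day check mirrors S3's minimum for transitions to Standard IA; the ordering check is just our own sanity check.

```python
def tiered_lifecycle(ia_days: int = 30, archive_days: int = 540, rule_id: str = "ia-then-deep-archive") -> dict:
    """Standard -> Standard IA after ia_days, then -> Glacier Deep Archive after archive_days."""
    if ia_days < 30:
        # S3 does not allow lifecycle transitions to Standard IA before 30 days.
        raise ValueError("transition to STANDARD_IA needs at least 30 days")
    if archive_days <= ia_days:
        # Sanity check: archive only after the IA step.
        raise ValueError("archive_days must be greater than ia_days")
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Filter": {"Prefix": ""},  # all objects in the bucket
                "Status": "Enabled",
                "Transitions": [
                    {"Days": ia_days, "StorageClass": "STANDARD_IA"},
                    {"Days": archive_days, "StorageClass": "DEEP_ARCHIVE"},
                ],
            }
        ]
    }
```

The result can be passed as `LifecycleConfiguration` to boto3's `put_bucket_lifecycle_configuration`, which, as with any lifecycle change, replaces the bucket's existing rules.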

Result

With this simple approach, we reduced our S3 costs sevenfold, saving about $40k annually.